
The project is based on 3 stages:

- Calibration: In this stage, we find all the parameters required by the algorithm: the internal parameters of the cameras, and the external parameters that relate the coordinate systems (CS) of the different cameras.
- Filming a scene: In this stage, we take a picture with all 3 cameras and prepare the database (DB) for the fusion stage.
- Fusion: In the final stage, we take the data generated from the 2 distance maps and use a fusion algorithm to produce an optimal final distance map.

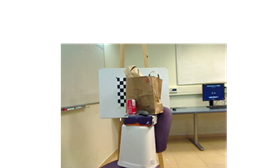

Images of our Setup:

Calibration Stage: In the calibration stage, we take several images of a black-and-white chessboard and use them to find the following parameters:

1. The focal length of the stereo cameras.
2. The baseline of the setup, i.e. the distance between the stereo cameras.

In addition, we generate 2 separate LUTs (Look-Up Tables) so that the image from the ToF camera can be transformed to the stereo-left camera CS. The 2 LUTs are:

1. Photometric LUT: required for correcting internal ToF camera noise, which causes different colors in an image of a planar surface to be measured as different distances.
2. Geometric LUT: required for transforming the image from the ToF CS to the stereo-left CS.

For example: Original ToF image:

After Refinements:

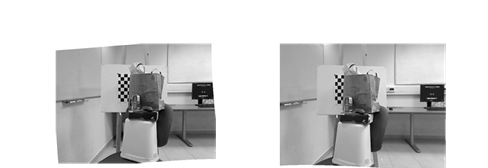
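As a rough illustration of how the two LUTs might be applied to a ToF frame, here is a minimal Python sketch. It assumes that the photometric LUT is indexed by pixel intensity and stores a distance offset, and that the geometric LUT stores, for every stereo-left pixel, the ToF pixel to read. The function names and LUT layouts are hypothetical and are not taken from the project's code.

```python
import numpy as np

def apply_photometric_lut(tof_distance, tof_intensity, photo_lut):
    """Subtract an intensity-dependent distance offset from every ToF pixel.

    Assumption: photo_lut[i] is the distance error measured for intensity i.
    """
    idx = np.clip(tof_intensity.astype(np.intp), 0, len(photo_lut) - 1)
    return tof_distance - photo_lut[idx]

def apply_geometric_lut(tof_distance, map_rows, map_cols, fill=np.nan):
    """Resample the ToF distance image onto the stereo-left pixel grid.

    Assumption: map_rows / map_cols give, for every stereo-left pixel, the ToF
    pixel to read (nearest neighbour). Pixels that map outside the ToF image
    receive `fill`.
    """
    h, w = tof_distance.shape
    valid = (map_rows >= 0) & (map_rows < h) & (map_cols >= 0) & (map_cols < w)
    out = np.full(map_rows.shape, fill, dtype=float)
    out[valid] = tof_distance[map_rows[valid], map_cols[valid]]
    return out
```

In this sketch the photometric correction runs first, in the ToF camera's own pixel grid, and the geometric LUT then moves the corrected values into the stereo-left CS.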

Finally, we rectify the stereo images. Rectification transforms both images so that each pair of corresponding pixels lies on the same row in both images. For example: Original stereo images:

After Rectification:

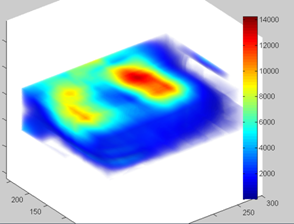
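Once the images are rectified, the horizontal offset (disparity) between corresponding pixels can be converted to distance using the focal length and baseline found during calibration, through the standard pinhole stereo relation Z = f * B / d. The sketch below shows that conversion; the function and parameter names are illustrative, and it assumes the focal length is expressed in pixels and the baseline in meters.

```python
import numpy as np

def disparity_to_distance(disparity_px, focal_length_px, baseline_m):
    """Convert a disparity map (pixels) to a distance map (meters).

    Pixels with zero or negative disparity have no valid match and are
    reported as infinitely far away.
    """
    disparity_px = np.asarray(disparity_px, dtype=float)
    distance = np.full(disparity_px.shape, np.inf)
    positive = disparity_px > 0
    distance[positive] = focal_length_px * baseline_m / disparity_px[positive]
    return distance
```

Because distance is inversely proportional to disparity, a one-pixel disparity error matters far more for distant objects than for near ones.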

Scene Photographing Stage: In this stage, we take a picture of a scene with all 3 cameras. We put the ToF image through all of the refinement and transformation processes. Finally, we create the DB that we use in the fusion process.

This DB is called the data term, and it can be presented graphically as follows:

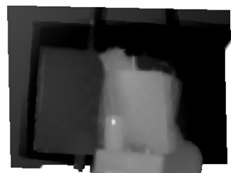
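The text does not spell out how the data term is computed, so the following is only one plausible construction, shown to make the idea concrete. It assumes the data term is a cost volume: for every pixel and every candidate distance, a stereo matching cost (absolute intensity difference between the rectified left and right images) plus a truncated penalty for disagreeing with the ToF measurement. The function name, weights, and truncation value are all hypothetical.

```python
import numpy as np

def build_data_term(left, right, tof_distance, candidates, focal_px, baseline_m,
                    w_stereo=1.0, w_tof=1.0, tof_trunc=0.5):
    """Return cost[k, y, x]: the cost of assigning candidates[k] to pixel (y, x).

    Assumptions: `left` and `right` are rectified grayscale images,
    `tof_distance` is already in the stereo-left CS (NaN where missing),
    and all candidate distances are positive.
    """
    h, w = left.shape
    cols = np.arange(w)
    left_f = left.astype(float)
    right_f = right.astype(float)
    cost = np.empty((len(candidates), h, w))
    for k, z in enumerate(candidates):
        # Stereo term: compare each left pixel with the right pixel shifted by
        # the disparity that distance z implies (clamped at the image border).
        d = int(round(focal_px * baseline_m / z))
        src = np.clip(cols - d, 0, w - 1)
        stereo = np.abs(left_f - right_f[:, src])
        # ToF term: truncated deviation from the ToF measurement; pixels
        # without a ToF value contribute nothing.
        tof = np.minimum(np.abs(tof_distance - z), tof_trunc)
        tof = np.where(np.isnan(tof_distance), 0.0, tof)
        cost[k] = w_stereo * stereo + w_tof * tof
    return cost
```

Taking the per-pixel minimum of such a volume would already give a crude distance map; the fusion stage improves on that by also taking neighboring pixels into account.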

Fusion stage: In the fusion stage, we use an iterative algorithm called “Belief Propagation” to find the optimal distance map, using the data found in the previous stages. In each iteration, each pixel “sends a message” to its neighbors with data about the distance. After all messages have been processed, each pixel has an energy value. After each iteration, we calculate the total energy of the image and move on to the next iteration. The algorithm converges in such a way that the total energy of the image decreases as the iterations progress.
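The message-passing loop can be sketched as follows. This is a generic min-sum loopy belief propagation on a 4-connected pixel grid, not the project's actual implementation: it assumes the data term is a cost volume `data_cost[k, y, x]` over discrete distance labels and uses a linear smoothness penalty between neighbors, both of which are assumptions made for illustration.

```python
import numpy as np

def total_energy(data_cost, label_map, smooth_weight):
    """Data cost of the chosen labels plus the smoothness cost between neighbours."""
    h, w = label_map.shape
    rows, cols = np.indices((h, w))
    data = data_cost[label_map, rows, cols].sum()
    smooth = (np.abs(np.diff(label_map, axis=0)).sum()
              + np.abs(np.diff(label_map, axis=1)).sum())
    return data + smooth_weight * smooth

def belief_propagation(data_cost, smooth_weight=1.0, iterations=10):
    """Return the label map and the total energy recorded after each iteration."""
    n_labels, h, w = data_cost.shape
    k = np.arange(n_labels)
    # Pairwise cost: linear penalty on the label difference of two neighbours.
    pairwise = smooth_weight * np.abs(k[:, None] - k[None, :])
    # msgs[d] is the message each pixel RECEIVES from the neighbour in
    # direction d: 0 = above, 1 = below, 2 = left, 3 = right.
    msgs = np.zeros((4, n_labels, h, w))
    opposite = (1, 0, 3, 2)
    energies = []
    for _ in range(iterations):
        total = data_cost + msgs.sum(axis=0)
        new = np.zeros_like(msgs)
        for d in range(4):
            # A sender passes on everything it knows except what the
            # receiver itself told it in the previous iteration.
            held = total - msgs[opposite[d]]
            # Minimise over the sender's label for every receiver label.
            out = (held[:, None] + pairwise[:, :, None, None]).min(axis=0)
            out -= out.min(axis=0, keepdims=True)  # keep messages bounded
            if d == 0:
                new[0][:, 1:, :] = out[:, :-1, :]
            elif d == 1:
                new[1][:, :-1, :] = out[:, 1:, :]
            elif d == 2:
                new[2][:, :, 1:] = out[:, :, :-1]
            else:
                new[3][:, :, :-1] = out[:, :, 1:]
        msgs = new
        label_map = (data_cost + msgs.sum(axis=0)).argmin(axis=0)
        energies.append(total_energy(data_cost, label_map, smooth_weight))
    return label_map, energies
```

Recording the energy after every iteration, as the returned list does, makes it possible to plot it and check whether it decreases in practice; on a grid with loops this is not guaranteed in general.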

Results: The results show that the algorithm improves the distance map compared to the maps provided by each of the methods alone. The fused map retains the high distance resolution of the stereo-interpolation method. In addition, uniformly colored areas are mapped better than with the stereo-interpolation method alone.

Result images: original scene; distance map using stereo interpolation; distance map using the ToF camera; distance map after fusion.