
Camera Path Reconstruction


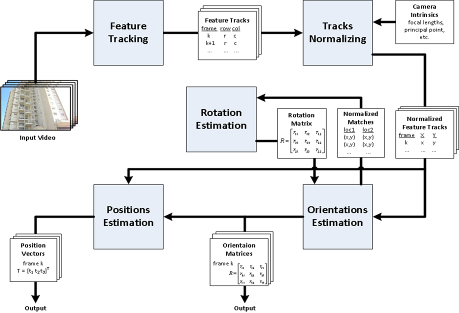

Solution Outline

The outline of our solution is presented in the following figure:

The Feature Tracking unit is responsible for detecting and following features (interest points) in the input video. It outputs a list where each entry corresponds to a different feature track, and specifies the track’s location across the video frames. From this point onwards, the algorithm does not directly use the input video, only the detected feature tracks.

The Tracks Normalizing unit normalizes the feature tracks, i.e. it calibrates the feature locations using the capturing camera’s intrinsic parameters, which were measured a priori. Its output is identical to that of Feature Tracking, except that the coordinates are calibrated.
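As an illustration of this step, the following minimal sketch maps pixel locations to normalized coordinates. It assumes a pinhole camera with zero skew and no lens distortion, described by focal lengths `fx`, `fy` and principal point `cx`, `cy`. The track representation (a dictionary from frame index to location) and all function names are hypothetical, chosen only for this example.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Track = Dict[int, Point]  # hypothetical layout: frame index -> feature location


def normalize_point(p: Point, fx: float, fy: float, cx: float, cy: float) -> Point:
    """Map a pixel location to normalized image coordinates.

    Assumes a pinhole model with zero skew and no lens distortion.
    """
    u, v = p
    return ((u - cx) / fx, (v - cy) / fy)


def normalize_tracks(
    tracks: List[Track], fx: float, fy: float, cx: float, cy: float
) -> List[Track]:
    """Return tracks with the same structure but calibrated coordinates."""
    return [
        {frame: normalize_point(p, fx, fy, cx, cy) for frame, p in track.items()}
        for track in tracks
    ]


if __name__ == "__main__":
    pixel_tracks = [{0: (320.0, 240.0), 1: (420.0, 340.0)}]
    print(normalize_tracks(pixel_tracks, fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```

The output keeps one entry per track and one location per frame, so downstream units can consume it exactly as they would the raw tracks.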

The next unit to come into play is Orientations Estimation. Based on the normalized feature tracks, it:

· Decides which frame pairs will have their relative rotation estimated by the Rotation Estimation unit.
· Accumulates the estimated rotations into global estimates of the camera orientations.

The output of Orientations Estimation is a list in which each entry corresponds to a different frame and specifies the estimated camera orientation at that frame.
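The accumulation step can be sketched as follows. This is only an illustration under simplifying assumptions: relative rotations are given for consecutive frames only, the first frame defines the reference orientation (identity), and orientations compose as R_{k+1} = R_{k→k+1} · R_k. The pair selection actually used by the unit may differ, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # 3x3 rotation matrix as nested lists


def matmul3(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 3x3 matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]


def accumulate_orientations(relative_rotations: List[Matrix]) -> List[Matrix]:
    """Chain relative rotations into one global orientation per frame.

    Assumption: relative_rotations[k] rotates frame k into frame k + 1,
    and frame 0 is the reference (identity orientation).
    """
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    orientations = [identity]
    for r_rel in relative_rotations:
        orientations.append(matmul3(r_rel, orientations[-1]))
    return orientations


if __name__ == "__main__":
    # Two successive 90-degree rotations about the z axis.
    rz90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
    for orientation in accumulate_orientations([rz90, rz90]):
        print(orientation)
```

Chaining N relative rotations yields N + 1 orientations, one per frame. Errors in individual estimates accumulate along the chain, which is one reason the choice of frame pairs matters.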

The Rotation Estimation unit serves Orientations Estimation as a subordinate unit. It receives from the latter a list of normalized feature correspondences between two views and estimates the relative rotation between them.
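To make the interface between the two units concrete, the sketch below builds the list of correspondences for one frame pair from the normalized tracks. It assumes each track is stored as a dictionary from frame index to location. That layout and the name `correspondences` are hypothetical, and the rotation estimation itself is not shown.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Track = Dict[int, Point]  # hypothetical layout: frame index -> normalized location


def correspondences(
    tracks: List[Track], frame_a: int, frame_b: int
) -> List[Tuple[Point, Point]]:
    """Collect matching locations of every track visible in both frames."""
    return [
        (track[frame_a], track[frame_b])
        for track in tracks
        if frame_a in track and frame_b in track
    ]


if __name__ == "__main__":
    tracks = [
        {0: (0.10, 0.20), 1: (0.12, 0.21), 2: (0.15, 0.23)},
        {1: (-0.30, 0.05), 2: (-0.28, 0.06)},
        {0: (0.40, -0.10)},
    ]
    # Only the first two tracks are visible in both frame 1 and frame 2.
    print(correspondences(tracks, 1, 2))
```

Tracks that are missing from either frame contribute nothing, so the number of returned pairs also indicates how much the two views overlap.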

The Positions Estimation unit receives the list of camera orientations from Orientations Estimation and the list of normalized feature tracks from Tracks Normalizing. From these, it estimates the camera positions, outputting a list in which each entry corresponds to a different video frame and specifies the estimated camera position at that frame.

Altogether, the algorithm receives an input video from a camera with known intrinsic parameters, and outputs two lists: one with estimated camera orientations throughout the video, and the second with estimated camera positions throughout the video.
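The data flow between the units can be summarized in a short sketch. The units themselves are passed in as callables, since their internals are not specified here. All names are hypothetical, and the Rotation Estimation unit is assumed to be invoked from inside `estimate_orientations`.

```python
from typing import Any, Callable, List, Tuple


def reconstruct_camera_path(
    video: Any,
    track_features: Callable[[Any], List[Any]],
    normalize_tracks: Callable[[List[Any]], List[Any]],
    estimate_orientations: Callable[[List[Any]], List[Any]],
    estimate_positions: Callable[[List[Any], List[Any]], List[Any]],
) -> Tuple[List[Any], List[Any]]:
    """Wire the units together in the order described in the outline."""
    tracks = track_features(video)              # the video is used only here
    normalized = normalize_tracks(tracks)       # calibrated coordinates
    orientations = estimate_orientations(normalized)
    positions = estimate_positions(orientations, normalized)
    return orientations, positions


if __name__ == "__main__":
    # Placeholder units that only demonstrate the wiring.
    orientations, positions = reconstruct_camera_path(
        video="input.avi",
        track_features=lambda video: ["track-0", "track-1"],
        normalize_tracks=lambda tracks: [t + "-normalized" for t in tracks],
        estimate_orientations=lambda tracks: ["R0", "R1", "R2"],
        estimate_positions=lambda rotations, tracks: ["p0", "p1", "p2"],
    )
    print(orientations, positions)
```

Passing the units as parameters keeps the sketch independent of any particular tracking or estimation method.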