Collision recognition from a video

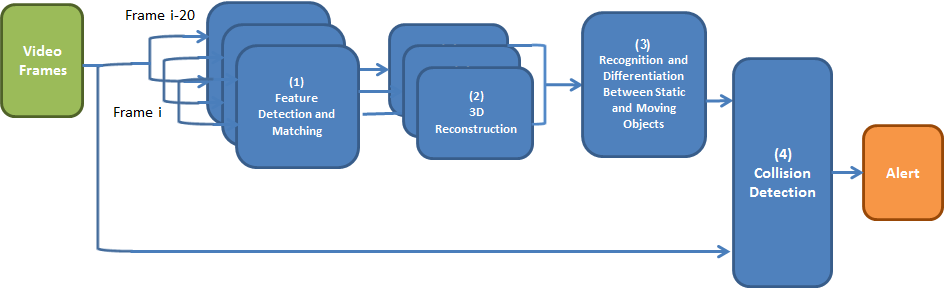

System Outline

The system takes video from a camera oriented at an angle to the direction of movement.

The system divides the video into time windows (~2.5 seconds each), then looks at pairs of frames taken one second apart.
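The windowing step can be sketched as follows. This is a minimal illustration, not the project's code: the function name `frame_pairs_by_window`, the non-overlapping windows, and the `step` parameter are assumptions. Only the ~2.5 second window and the one-second gap come from the description above.

```python
def frame_pairs_by_window(n_frames, fps, window_s=2.5, gap_s=1.0, step=1):
    """Return, per time window, the frame-index pairs (i, j) with j - i == gap_s * fps.

    Assumptions (not from the source): windows do not overlap, and a
    trailing partial window is dropped.
    """
    window_len = int(round(window_s * fps))  # frames per window
    gap = int(round(gap_s * fps))            # frames between the two frames of a pair
    windows = []
    for start in range(0, n_frames - window_len + 1, window_len):
        end = start + window_len  # exclusive
        # Keep only pairs whose second frame still lies inside the window.
        pairs = [(i, i + gap) for i in range(start, end - gap, step)]
        windows.append(pairs)
    return windows


if __name__ == "__main__":
    for k, pairs in enumerate(frame_pairs_by_window(n_frames=100, fps=10)):
        print(f"window {k}: {len(pairs)} pairs, first={pairs[0]}, last={pairs[-1]}")
```

A larger `step` reduces the number of pairs per window, which trades reconstruction count for processing time.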

For each such pair of frames, we reconstruct the 3D world, using the following main components:

- (1) Feature Detection and Matching - where we find interest points and match them between the two frames. This stage was implemented using ASIFT.

- (2) 3D Reconstruction - where we reconstruct the 3D world based on the two frames. This was done using standard techniques.
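The text only says that reconstruction used standard techniques, so the sketch below picks one well-known option, midpoint triangulation, purely as an illustration. It assumes the camera centers (`c1`, `c2`) and the viewing-ray directions of a matched feature (`d1`, `d2`) are already known in a common world frame; these names and the function `triangulate_midpoint` are hypothetical.

```python
def _dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    Returns None when the rays are (nearly) parallel, since the depth is
    then undetermined.
    """
    w = [p - q for p, q in zip(c1, c2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < eps:
        return None
    # Closed-form minimizer of |(c1 + s*d1) - (c2 + t*d2)|^2.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(u + v) / 2 for u, v in zip(p1, p2)]


if __name__ == "__main__":
    # Two cameras one unit apart, both looking at the point (0.5, 0, 5).
    print(triangulate_midpoint((0, 0, 0), (0.5, 0, 5), (1, 0, 0), (-0.5, 0, 5)))
```

With noisy matches the two rays do not intersect exactly, which is why the midpoint of their closest approach is used rather than an exact intersection.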

After we have gathered enough reconstructions for a time window, we do the following:

- (3) Recognition and Differentiation Between Static and Moving Objects - where we match the reconstructions of each point across frame pairs, and separate the moving points from the static ones based on the covariance matrix of their reconstructed positions and on the epipolar error.

- (4) Collision Detection - where we estimate whether the dynamic points are moving towards us, using the scatter of their reconstructed positions as the metric.
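The two cues named in stage (3) can be sketched as below. This is an assumption-laden illustration: the source does not say how the covariance matrix and the epipolar error are combined, so the sketch uses the trace of the covariance and a simple "either cue exceeds its threshold" rule. The thresholds `max_trace` and `max_epi` and all function names are hypothetical.

```python
import math
from statistics import pvariance


def epipolar_distance(F, x1, x2):
    """Pixel distance from x2 to the epipolar line F @ x1.

    F is a 3x3 fundamental matrix (nested lists); x1 and x2 are (u, v)
    pixel coordinates in the first and second frame. A point that is
    static in the world should lie close to its epipolar line.
    """
    p1 = (x1[0], x1[1], 1.0)
    line = [sum(F[r][c] * p1[c] for c in range(3)) for r in range(3)]
    norm = math.hypot(line[0], line[1])  # zero only for a degenerate line
    return abs(line[0] * x2[0] + line[1] * x2[1] + line[2]) / norm


def covariance_trace(points):
    """Trace of the covariance of a point's 3D reconstructions (sum of per-axis variances)."""
    return sum(pvariance(axis) for axis in zip(*points))


def is_moving(points, epi_errors, max_trace=0.05, max_epi=2.0):
    """Flag a point as moving if either cue exceeds its (hypothetical) threshold."""
    return covariance_trace(points) > max_trace or max(epi_errors) > max_epi


if __name__ == "__main__":
    # Fundamental matrix of a pure sideways translation: epipolar lines are horizontal.
    F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]
    print(epipolar_distance(F, (10, 20), (15, 20)))  # point stays on its line
    print(epipolar_distance(F, (10, 20), (15, 23)))  # point drifts off its line
```

A static point reconstructed from several frame pairs should land in nearly the same place each time, so its covariance stays small; a moving point spreads out along its trajectory.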

Finally, if the fourth stage consistently indicates a possible collision, an alert is sounded.
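One way to read "consistently" is that several consecutive decisions must agree before the alert fires. The sketch below assumes exactly that; the class name `CollisionAlarm` and the default of three consecutive positive decisions are hypothetical and not taken from the source.

```python
from collections import deque


class CollisionAlarm:
    """Fire only after `required` consecutive positive decisions from stage (4)."""

    def __init__(self, required=3):
        # Bounded history: older decisions fall off automatically.
        self.recent = deque(maxlen=required)

    def update(self, collision_suspected):
        """Record the latest decision and return True if the alert should sound."""
        self.recent.append(bool(collision_suspected))
        return len(self.recent) == self.recent.maxlen and all(self.recent)


if __name__ == "__main__":
    alarm = CollisionAlarm(required=3)
    for decision in [True, True, False, True, True, True]:
        print(decision, "->", alarm.update(decision))
```

Requiring agreement across several decisions suppresses one-off false positives at the cost of a slightly later alert.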