PROJECT GOALS

Translating user motion into meaningful gestures consists of four main tasks: data acquisition, space transformation, the recognition algorithm, and matching against known gestures. The first two tasks are mostly a matter of engineering. The recognition algorithm and the organization and search of the gesture database leave a wide field for research and improvement. Various approaches to these tasks have already been proposed and described, but a definitive solution has yet to be found. The goal of our project is either to combine several known methods into a more precise and faster algorithm, or to propose a new way of processing real-time input. The final algorithm must meet the following requirements: real-time processing of input, fast computation, accurate matching, flexibility across users, and storage efficiency.

ALGORITHM DESCRIPTION

After examining the existing methods, we decided to approach the problem from a different angle. Instead of searching for a mathematical function that describes the input, we follow the pattern of the input and try to identify the user's intention. The guiding observation was that shapes a human identifies and classifies without difficulty can rarely be described as a simple mathematical entity that a computer can process. We therefore wanted the computer to recognize the input by what it “looks like” rather than by what it mathematically is.

LINE DETECTION

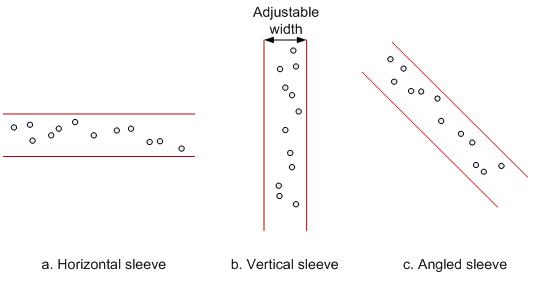

Our approach builds boundaries around the input and classifies the input by following those boundaries.

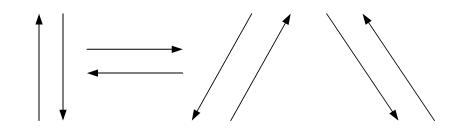

Whenever the input crosses the current boundary, the current line type is stored and a new boundary is created. Input is considered valid only after it has covered a certain physical length, so all points close to the starting position are ignored. This method allows us to detect lines going in any direction.

After examining a wide variety of inputs, we concluded that there is no point in distinguishing between diagonal lines of different inclinations, since doing so would make matching very unreliable. In addition, when a person performs a continuous gesture in space, it is very hard to produce distinct lines at 30°, 45° or 60°, let alone repeat the same inclination each time. As a result, we limited the algorithm to detecting a general diagonal line and storing only its direction; thus a line drawn from top-left to bottom-right and its inverse are still treated as different line types.
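The boundary-following step can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the project's implementation: the names `segment_stroke`, `min_length` and `half_width` are hypothetical, each boundary is modeled as a straight sleeve of fixed half-width around the direction the input takes once it has travelled `min_length` from the line's start, and moving backwards along the same line also counts as leaving the sleeve.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def segment_stroke(points: List[Point], min_length: float, half_width: float) -> List[Segment]:
    """Split a stroke into straight segments by following a sleeve around each line.

    Hypothetical parameters:
      min_length: distance the input must travel from a line's start before the
                  line's direction is fixed; closer points are ignored.
      half_width: half the width of the sleeve (boundary) around the line.
    """
    segments: List[Segment] = []
    if len(points) < 2:
        return segments
    start = last = points[0]
    direction = None  # unit vector of the current line, unknown until min_length
    max_along = 0.0   # furthest progress along the current line so far
    for p in points[1:]:
        dx, dy = p[0] - start[0], p[1] - start[1]
        if direction is not None:
            along = dx * direction[0] + dy * direction[1]        # progress along the line
            across = abs(direction[0] * dy - direction[1] * dx)  # distance from the line
            if across <= half_width and along >= max_along - half_width:
                max_along = max(max_along, along)
                last = p
                continue
            # The input left the sleeve: store the line and start a new one at its end.
            segments.append((start, last))
            start, direction, max_along = last, None, 0.0
            dx, dy = p[0] - start[0], p[1] - start[1]
        # No direction yet: ignore points until the input is far enough from the start.
        dist = math.hypot(dx, dy)
        if dist >= min_length:
            direction = (dx / dist, dy / dist)
            max_along = dist
        last = p
    if direction is not None:
        segments.append((start, last))
    return segments


# An L-shaped stroke: right along y = 0, then up along x = 10.
stroke = [(x, 0) for x in range(11)] + [(10, y) for y in range(1, 11)]
print(segment_stroke(stroke, min_length=2.0, half_width=0.5))
# [((0, 0), (10, 0)), ((10, 0), (10, 10))]
```

Starting each new line at the last point that was still inside the old sleeve keeps consecutive segments connected, so a gesture is stored as one continuous chain of lines.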

In summary, the algorithm currently detects horizontal, vertical and diagonal lines together with their direction: 8 line types in total.
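One possible way to reduce a detected segment to one of these 8 line types is to quantize its angle into 45° sectors, as in the sketch below. The labels in `LINE_TYPES`, the function `classify_line`, and the ±22.5° sector boundaries are our assumptions; the text above does not specify where a nearly horizontal line stops and a diagonal one begins.

```python
import math

# Hypothetical labels for the 8 line types; y is assumed to grow upward
# (for screen coordinates, where y grows downward, negate dy first).
LINE_TYPES = ["right", "up-right", "up", "up-left",
              "left", "down-left", "down", "down-right"]


def classify_line(start, end):
    """Map a segment to one of 8 line types, each covering a 45-degree sector."""
    angle = math.degrees(math.atan2(end[1] - start[1], end[0] - start[0]))
    # Shift by half a sector so that, e.g., -22.5..22.5 degrees maps to "right".
    sector = int((angle + 22.5) // 45) % 8
    return LINE_TYPES[sector]


print(classify_line((0, 1), (2, -1)))  # down-right
print(classify_line((2, -1), (0, 1)))  # up-left
```

Because the direction is kept, a top-left to bottom-right diagonal and its inverse land in different sectors, while all inclinations within a sector collapse to the same type.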

CIRCLE DETECTION

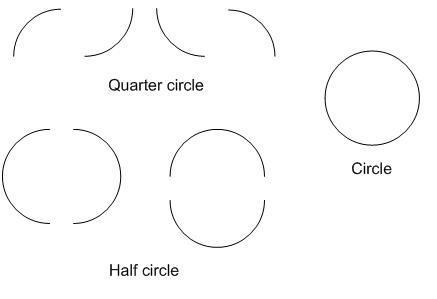

Circle detection runs in parallel with line detection and searches for circles in the same input data that is passed to the line detection algorithm. The fitting algorithm itself is based on the publication by G. Taubin [3]. Its output is the best circle fit that can be achieved with the points provided. A sanity check then decides whether the fit is meaningful at all, so that a slightly curved line is not mistaken for a circle. Once the data has been classified as a circle, a finer classification determines which part of the circle was drawn. We decided that the smallest part of a circle that can be fitted reliably enough is a quarter circle, so this is the smallest circular shape our code detects.
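For illustration, the following sketch implements an SVD-based formulation of Taubin's algebraic circle fit, together with a sanity check. It assumes NumPy; `taubin_fit`, `looks_like_circle` and both thresholds are hypothetical. The check shown (a small RMS residual relative to the radius, and a radius no larger than a multiple of the input's bounding-box size) is one plausible way to reject slightly curved lines, not necessarily the check used in the project.

```python
import numpy as np


def taubin_fit(points):
    """Algebraic circle fit after Taubin (SVD formulation); returns (cx, cy, r) or None."""
    xy = np.asarray(points, dtype=float)
    if len(xy) < 3:
        return None
    centroid = xy.mean(axis=0)
    x, y = xy[:, 0] - centroid[0], xy[:, 1] - centroid[1]
    z = x * x + y * y
    z_mean = z.mean()
    if z_mean == 0.0:
        return None                      # all points coincide
    z0 = (z - z_mean) / (2.0 * np.sqrt(z_mean))
    _, _, vt = np.linalg.svd(np.column_stack([z0, x, y]), full_matrices=False)
    a = vt[-1].copy()                    # singular vector of the smallest singular value
    if abs(a[0]) < 1e-12:
        return None                      # (near-)collinear points: no finite circle
    a[0] /= 2.0 * np.sqrt(z_mean)        # undo the normalisation of the z column
    d = -z_mean * a[0]                   # constant term of a*z + b*x + c*y + d = 0 (centred)
    cx, cy = -a[1:3] / (2.0 * a[0]) + centroid
    r = np.sqrt(a[1] ** 2 + a[2] ** 2 - 4.0 * a[0] * d) / (2.0 * abs(a[0]))
    return float(cx), float(cy), float(r)


def looks_like_circle(points, fit, max_rel_rms=0.1, max_radius_ratio=2.0):
    """Hypothetical sanity check: small residuals and a radius comparable to the input size."""
    if fit is None:
        return False
    cx, cy, r = fit
    xy = np.asarray(points, dtype=float)
    rms = np.sqrt(np.mean((np.hypot(xy[:, 0] - cx, xy[:, 1] - cy) - r) ** 2))
    extent = (xy.max(axis=0) - xy.min(axis=0)).max()  # larger side of the bounding box
    return rms <= max_rel_rms * r and r <= max_radius_ratio * extent
```

A slightly curved line still produces a circle fit, but its radius is many times the size of the drawn input, which is what the second condition is meant to catch.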

In summary, the algorithm currently detects quarter, half and whole circles, together with the position of the detected part on the circle; for example, it distinguishes a top-left quarter from a bottom-right quarter. In total, 9 different circle parts are detectable.
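The finer classification could be done by measuring the angle swept around the fitted center, as in this sketch. It assumes that the 9 parts are the four quarters, the four halves (top, bottom, left, right) and the whole circle; that y grows upward; and that sweeps of at least 60°, 135° and 300° count as a quarter, a half and a whole circle. These thresholds and the names `classify_arc`, `QUARTERS` and `HALVES` are hypothetical.

```python
import math

QUARTERS = ["top-right quarter", "top-left quarter",
            "bottom-left quarter", "bottom-right quarter"]
HALVES = ["right half", "top half", "left half", "bottom half"]


def classify_arc(points, cx, cy, whole_deg=300.0, half_deg=135.0, quarter_deg=60.0):
    """Name the part of the fitted circle covered by the points (y assumed to grow upward).

    The angle thresholds are hypothetical tuning values.
    """
    if len(points) < 2:
        return None
    angles = [math.atan2(y - cy, x - cx) for x, y in points]
    # Unwrap the angles so that the swept angle can exceed 180 degrees.
    unwrapped = [angles[0]]
    for prev, cur in zip(angles, angles[1:]):
        step = (cur - prev + math.pi) % (2 * math.pi) - math.pi
        unwrapped.append(unwrapped[-1] + step)
    sweep = math.degrees(abs(unwrapped[-1] - unwrapped[0]))
    mid = math.degrees((unwrapped[0] + unwrapped[-1]) / 2) % 360  # middle of the arc
    if sweep >= whole_deg:
        return "whole circle"
    if sweep >= half_deg:
        return HALVES[int((mid + 45) // 90) % 4]
    if sweep >= quarter_deg:
        return QUARTERS[int(mid // 90) % 4]
    return None  # shorter than a quarter: not fitted reliably enough
```

Using the middle of the arc rather than its start point makes the result independent of whether the user drew the arc clockwise or counter-clockwise.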

PARAMETERIZATION

The whole implementation is driven by parameters that define its basic behavior, such as the width of the sleeves (the boundaries built around the input) or the number of points to ignore at the start of each new shape. This makes the algorithms customizable per user. By asking a new user to draw a few predefined shapes, such as a horizontal line, a vertical line or a centered circle, these parameters can be extracted and tailored to that user's style of movement. Since the test group was limited to two people and we saw no differences between our input styles, we did not implement an interface for this type of customization. In our opinion, this feature would be useful if the system were tested on a larger group.
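A minimal sketch of how the sleeve width might be calibrated from a user's reference lines, assuming the sleeve half-width should cover the user's largest sideways wobble plus a safety margin. `RecognizerParams`, its default values, `max_deviation`, `calibrate` and `margin` are hypothetical; calibration from a centered circle is not shown.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RecognizerParams:
    """Hypothetical parameter set; defaults are placeholders, not the project's values."""
    sleeve_half_width: float = 0.05
    ignore_points: int = 5


def max_deviation(stroke):
    """Largest perpendicular distance of a stroke's points from its first-to-last chord."""
    (x0, y0), (x1, y1) = stroke[0], stroke[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    if length == 0:
        raise ValueError("reference stroke must not start and end at the same point")
    return max(abs((x1 - x0) * (y - y0) - (y1 - y0) * (x - x0)) / length for x, y in stroke)


def calibrate(params, reference_lines, margin=1.5):
    """Fit the sleeve to how steadily this user draws straight reference lines."""
    deviation = max(max_deviation(stroke) for stroke in reference_lines)
    return replace(params, sleeve_half_width=margin * deviation)


horizontal = [(0, 0), (1, 0.1), (2, -0.2), (3, 0)]
vertical = [(0, 0), (0.1, 1), (0, 2)]
user_params = calibrate(RecognizerParams(), [horizontal, vertical])
```

Because `RecognizerParams` is frozen, `calibrate` returns a new parameter set and leaves the defaults untouched, which makes it straightforward to keep one profile per user.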

DATABASE SEARCH

Since our approach detects gestures while the input is still being entered, we think the appropriate method is to store gestures in the database in reverse order and to search the database in the same manner. That is, during recognition, the first part of a gesture checked against the database would be the one most recently detected by the algorithm. This would provide a means of discarding excess movement at the beginning of continuous human motion. Since the system is not practical for a large number of gestures or for very complicated ones, no sophisticated database structure is needed: the search load is negligible, and the search can run in parallel (on the fly) with gesture entry.
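The reverse-order lookup can be illustrated with a small dictionary-based trie, assuming each gesture is stored as a sequence of shape labels (line types and circle parts). The names `ReverseGestureIndex`, `add` and `match`, and the rule that the longest matching gesture wins, are our own hypothetical choices rather than a structure specified by the project.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class _Node:
    children: Dict[str, "_Node"] = field(default_factory=dict)
    gesture: Optional[str] = None  # set if a stored gesture ends at this node


class ReverseGestureIndex:
    """Gestures stored as reversed shape sequences (hypothetical structure)."""

    def __init__(self) -> None:
        self.root = _Node()

    def add(self, name: str, shapes: List[str]) -> None:
        node = self.root
        for shape in reversed(shapes):  # store the last shape first
            node = node.children.setdefault(shape, _Node())
        node.gesture = name

    def match(self, detected: List[str]) -> Optional[str]:
        """Return the longest stored gesture whose shapes form a suffix of `detected`."""
        node, best = self.root, None
        for shape in reversed(detected):  # most recently detected shape first
            node = node.children.get(shape)
            if node is None:
                break
            if node.gesture is not None:
                best = node.gesture
        return best


index = ReverseGestureIndex()
index.add("L-shape", ["down", "right"])
# Excess movement at the start ("up", "up-right") is skipped by reading backwards.
print(index.match(["up", "up-right", "down", "right"]))  # L-shape
```

Calling `match` after every newly detected shape keeps the search incremental, which fits the on-the-fly search described above.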