Yoav Y. Schechner: Research

Cross-Modal Denoising

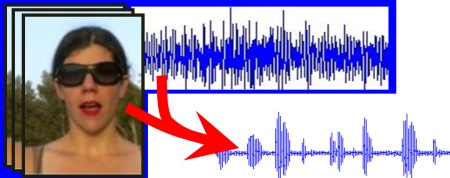

Most cameras in widespread use today are part of multisensory systems with an integrated computer (smartphones). Computer vision is thus evolving toward cross-modal sensing, where vision and other sensors cooperate. Such cooperation exists in humans and animals, reflecting nature, where visual events are often accompanied by sounds. Can vision assist in denoising another modality? As a case study, we demonstrate this principle by using video to denoise audio. Unimodal (audio-only) denoising is very difficult when the noise source is non-stationary, complex (e.g., another speaker or music in the background), strong, and not individually accessible in any modality (unseen). Cross-modal association can help: a clear video can direct the audio estimator. We show this using an example-based approach. A training movie with clear audio provides cross-modal examples. In testing, cross-modal input segments with noisy audio rely on these examples for denoising. The video channel drives the search for relevant training examples. We demonstrate this in speech and music experiments.
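The example-based idea described above can be illustrated with a toy sketch. This is not the algorithm from the paper: it assumes each segment has already been reduced to a small video feature vector, it ignores the noisy audio entirely, and it picks a single nearest training example by Euclidean distance on the video features alone. The names `denoise_by_example`, `squared_distance`, and the toy data are hypothetical.

```python
from typing import List, Sequence, Tuple

# One training example: (video feature vector, clean audio samples).
Segment = Tuple[Sequence[float], Sequence[float]]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def denoise_by_example(
    training: List[Segment],
    test_video_features: List[Sequence[float]],
) -> List[Sequence[float]]:
    """For each test segment, return the clean audio of the training example
    whose video feature is closest to the test segment's video feature.

    Assumption: the video channel alone drives the search; a real system
    would likely also use the noisy audio and temporal context.
    """
    if not training:
        raise ValueError("training set is empty")
    output = []
    for query in test_video_features:
        _, best_audio = min(
            training, key=lambda ex: squared_distance(ex[0], query)
        )
        output.append(best_audio)
    return output


# Toy data: 2-D "video features" paired with 3-sample "clean audio" segments.
training_examples = [
    ([0.0, 0.0], [0.0, 0.0, 0.0]),
    ([1.0, 0.0], [0.5, -0.5, 0.5]),
    ([0.0, 1.0], [1.0, 1.0, -1.0]),
]
# Video features of test segments whose audio is assumed to be noisy.
test_features = [[0.9, 0.1], [0.1, 0.8]]
estimated_audio = denoise_by_example(training_examples, test_features)
```

In this sketch each test segment simply inherits the clean audio of its visually closest training example. This also shows why a training movie with clear audio is needed: the quality of the output is bounded by the examples available.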

Publications

- D. Segev, Y. Y. Schechner and M. Elad, "Example-based cross-modal denoising," Proc. IEEE CVPR - Computer Vision and Pattern Recognition (2012).

- D. Segev, Y. Y. Schechner and M. Elad, "Supplementary Document," CCIT Report 810 EE 1767 (2012).

- "Example-based cross-modal denoising: Supplementary demos." Easy-to-navigate HTML pages for watching and hearing the results.

- "Cross-modal (audio visual) denoising" (10 Mb, PowerPoint)

- The PowerPoint presentation linked above includes links to movies and audio files. These media files are zipped together in the file "Media for audio-visual presentation" (15 Mb, ZIP). Place all of these files in the same directory as the PowerPoint presentation.